Python Tools Setup

A while ago I attempted Advent of Code (AoC) using Python, as I needed to learn Python for a change in my role. Now that I’m 18 months into working with Python, I think that setting up the AoC repo correctly from the beginning would have been a big boost for consistency. In this post we will look at the tools that I would always add to a project and how to set them up. We’ll also look at retrofitting them to an existing project.

Setting up a new project

This will be by far the easiest part: there is no existing code in the project, so all the tools that get set up should work immediately, or at least that is the theory. The tools I have chosen are pyenv, pip-tools, pre-commit, Black, Ruff, isort and pytest, plus a GitHub workflow to run the tests.

The reasons for choosing these tools are covered in more detail below.

pyenv

The first step is to create a new virtual environment. I’ve chosen pyenv for this as it’s what I am most familiar with. Pyenv (together with the pyenv-virtualenv plugin, which the installer sets up) allows for the creation of named virtual environments and provides easy switching between them with a single command. Virtual environments are useful when developing many Python projects that use different versions of Python and different dependency versions. I’ve been in dependency hell in the past and wish I’d known about virtual envs back then.

I also use oh-my-zsh with the zsh shell, and its pyenv plugin displays the env I’m currently using on the command line. This is very useful for preventing mistakes such as working in the wrong environment.

There are more details on the pyenv installer pages, but the command I run to install pyenv is:

```shell
curl -L https://github.com/pyenv/pyenv-installer/raw/master/bin/pyenv-installer | bash
```

Once the software is installed, I add the following to my .zshrc and then restart the shell:

```shell
export PYENV_ROOT="$HOME/.pyenv"
export PATH="$PYENV_ROOT/bin:$PATH"
eval "$(pyenv init --path)"
eval "$(pyenv init -)"
eval "$(pyenv virtualenv-init -)"

plugins=(git pyenv) # pyenv added to my list of available plugins
```

This is a one-time operation, so unless you change machines or uninstall the software, it will never need to be run again.

The next step is to install a version of Python into pyenv. For this demo I will use the latest 3.11 release, which is 3.11.5. Python 3.12.0 has been released, but only recently, so I haven’t had time to check out that version yet.

```shell
pyenv install 3.11.5
```

With a new Python version available to pyenv we need to create a new virtual environment using that version:

```shell
pyenv virtualenv 3.11.5 barebones
```

Now switch to the new virtual environment:

```shell
pyenv activate barebones
```

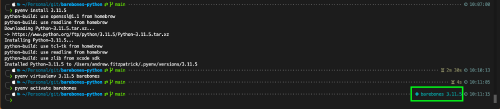

When the environment is activated, the environment name should be displayed in the terminal:

Dependencies

This section discusses the dependencies I would install in a barebones project, what those dependencies are for and why I would use them. It assumes that you have the virtual environment created above activated.

pip-tools

The first dependency is one that we need to install manually: pip-tools. This package makes it easier to manage the project dependencies and achieve deterministic builds. We can essentially freeze the dependency versions for a build and roll them out without having to worry about version updates introducing bugs. The command to install it is:

```shell
python -m pip install pip-tools
```

We now need to configure the project so that our dependency files can be compiled. I always create at least two requirements files, requirements.in and requirements-dev.in. The first file contains all the runtime dependencies that my application has. The second file references the compiled runtime dependencies and also contains the development dependencies my application has. For now our runtime dependencies file (requirements.in) will be empty, and our development dependencies file will contain:

```text
-r requirements.txt
-c requirements.txt
```

Note that the above doesn’t point to a .in file but to a .txt file. This is because we compile our dependencies, and the development dependencies are built on top of the compiled runtime dependency file.
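As a minimal sketch, the two input files described above could be created from the project root with a short POSIX shell script like this. It only writes the contents already described, and it uses `touch` so that an existing requirements.in is left alone rather than emptied.

```shell
#!/bin/sh
set -eu

# Runtime dependencies: none yet, but pip-compile still needs the file to exist.
# touch creates it if it is missing and leaves any existing contents untouched.
touch requirements.in

# Development dependencies: -r pulls in the compiled runtime pins and
# -c constrains any shared packages to those same pinned versions.
cat > requirements-dev.in <<'EOF'
-r requirements.txt
-c requirements.txt
EOF
```

Dev-only packages such as pre-commit, Black, Ruff, isort and pytest then get added as extra lines below these two.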

To compile our dependencies, we first need to compile the runtime dependencies:

```shell
pip-compile --allow-unsafe --no-emit-index-url --resolver=backtracking requirements.in
```

And then compile the dev dependencies:

```shell
pip-compile --allow-unsafe --no-emit-index-url --resolver=backtracking requirements-dev.in
```

You should now see two new files in the project directory, requirements.txt and requirements-dev.txt. These are autogenerated dependency files that also pin the dependencies of our dependencies, and they should not be edited manually.

Now we will install the project’s dependencies:

```shell
pip install -qr requirements-dev.txt
```

Note that we only need to install the dev dependencies locally, as this also installs the runtime dependencies. The next few steps add some build tools to our project.

pre-commit

The first tool we will add is pre-commit. Pre-commit adds git hooks that run when a contributor commits files; if any of the configured jobs fail, the commit cannot happen. This is a useful way to ensure quality in the project. I use pre-commit with tools that format code and also check for common problems.

To add pre-commit we need to run through the following steps:

- Add the pre-commit dependency to requirements-dev.in
- Compile the requirements
- Install the requirements
- Add a .pre-commit-config.yaml file containing some config. I add the following:

```yaml
fail_fast: true
default_stages: [pre-commit, pre-push]
minimum_pre_commit_version: 3.5.0

repos:
```

NOTE: the repos section is empty because we’re not adding any pre-commit jobs yet. This means that the project cannot be committed yet; we need to add a repo to the pre-commit config before we can commit. This is fine, though, as the next step is to add Black.

Black

Black is an uncompromising code formatter. This means that it is opinionated and enforces a code style that is compliant with PEP 8, and you don’t get many options for configuring code layout. Using Black means more consistency across the project, smaller PR diffs, less cognitive load for the engineers working in the code and less bike-shedding about indents, spacing and so on. Write your code however you like and it will be formatted on commit.

To add Black we need to run through the following steps:

- Add the Black dependency to requirements-dev.in
- Compile the requirements
- Install the requirements
- Add Black config to the pyproject.toml
- Add Black config to .pre-commit-config.yaml
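The last two steps can be sketched as a small POSIX shell script run from the project root. The values are assumptions for illustration, not settings taken from this post. 88 is Black’s default line length, set explicitly so other tools can be matched to it. `py311` matches the Python version installed earlier. The hook’s `rev` should be pinned to whichever Black release ends up in requirements-dev.txt. The hook is appended under the existing `repos:` key.

```shell
#!/bin/sh
set -eu

# Black settings in pyproject.toml (appended, so existing tables are kept)
cat >> pyproject.toml <<'EOF'

[tool.black]
line-length = 88
target-version = ["py311"]
EOF

# Hook entry appended under the `repos:` key of .pre-commit-config.yaml.
# Assumption: the rev is illustrative; match it to your installed Black version.
cat >> .pre-commit-config.yaml <<'EOF'

  - repo: https://github.com/psf/black
    rev: 23.9.1
    hooks:
      - id: black
EOF
```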

Ruff

Ruff is an extremely fast linter that is almost on par with flake8, which is close enough for my purposes. I use linters to make sure the code is compliant with standards and to weed out some of the bugs that can exist in our application. Ruff also reimplements checks from other tools traditionally used in Python projects, so it performs the same functions faster.

To add Ruff we need to run through the following steps:

- Add the Ruff dependency to requirements-dev.in
- Compile the requirements
- Install the requirements
- Add Ruff config to the pyproject.toml
- Add Ruff config to .pre-commit-config.yaml
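A minimal sketch of the two config steps follows, again as a POSIX shell script run from the project root. It assumes the shared options are all you need to set. Ruff’s default rule set already includes Pyflakes checks such as unused-import detection. The line length here is kept in step with Black’s default of 88, and the hook `rev` is an illustrative pin rather than a recommendation.

```shell
#!/bin/sh
set -eu

# Ruff settings in pyproject.toml, with the line length kept in step with Black
cat >> pyproject.toml <<'EOF'

[tool.ruff]
line-length = 88
target-version = "py311"
EOF

# Hook entry appended under the `repos:` key; the rev is illustrative
cat >> .pre-commit-config.yaml <<'EOF'

  - repo: https://github.com/astral-sh/ruff-pre-commit
    rev: v0.1.0
    hooks:
      - id: ruff
EOF
```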

isort

isort keeps imports organised, which makes it easier at PR time to spot which new dependencies are being added to a file. There is a long-standing convention of keeping imports at the top of the file, organised in some way, and isort enforces this for Python.

To add isort we need to run through the following steps:

- Add the isort dependency to requirements-dev.in
- Compile the requirements
- Install the requirements
- Add isort config to the pyproject.toml; make sure you increase the line length to match Black and set the profile to match Black
- Add isort config to .pre-commit-config.yaml
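As a sketch, assuming Black’s default line length of 88, the isort config could look like the script below. Note that isort spells its option `line_length` with an underscore, unlike Black’s `line-length`. The hook `rev` is illustrative and should match the isort version in requirements-dev.txt.

```shell
#!/bin/sh
set -eu

# isort settings in pyproject.toml
cat >> pyproject.toml <<'EOF'

[tool.isort]
# The black profile makes isort wrap and group imports the way Black expects
profile = "black"
line_length = 88
EOF

# Hook entry appended under the `repos:` key; the rev is illustrative
cat >> .pre-commit-config.yaml <<'EOF'

  - repo: https://github.com/PyCQA/isort
    rev: 5.12.0
    hooks:
      - id: isort
EOF
```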

pre-commit pt2

Now that we have our linters and formatters installed and configured, we can install the pre-commit git hooks by running:

```shell
pre-commit install
```

From this point on we can commit code to git, because pre-commit now has some tools to run.

Pytest

Pytest is a unit test framework for Python. It works in much the same way as any other unit testing framework: test files are discovered and executed when you run the pytest command. I also add an additional library for calculating test coverage. This allows a minimum level of coverage to be set, and the test run fails if that minimum isn’t reached.

To add pytest we need to run through the following steps:

- Add the pytest and pytest-cov dependencies to requirements-dev.in
- Compile the requirements
- Install the requirements
- Add pytest config to the pyproject.toml
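A minimal sketch of the pytest config, assuming the src and test directory layout used later in this post and the 80% coverage minimum mentioned below, might look like this. The `pythonpath` option needs pytest 7 or later. `src/example.py`, `add` and `test/test_example.py` are hypothetical names that only illustrate the `test_*.py` naming pytest discovers by default.

```shell
#!/bin/sh
set -eu

# pytest settings in pyproject.toml
cat >> pyproject.toml <<'EOF'

[tool.pytest.ini_options]
# Discover tests under test/ and make modules in src/ importable from them
testpaths = ["test"]
pythonpath = ["src"]
# pytest-cov: measure coverage of src/, list missed lines, fail below 80%
addopts = "--cov=src --cov-report=term-missing --cov-fail-under=80"
EOF

# Hypothetical module and matching test file
mkdir -p src test
cat > src/example.py <<'EOF'
def add(a: int, b: int) -> int:
    return a + b
EOF
cat > test/test_example.py <<'EOF'
from example import add


def test_add() -> None:
    assert add(2, 3) == 5
EOF
```

Running `pytest` from the project root would then pick up test/test_example.py and report coverage for src/.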

I don’t add pytest as a pre-commit job, because test suites can take a long time to run and I’d rather offload that time to a build server.

GitHub Workflow

Once all the build tools are set up, I know that any commits will be in the correct format and should be free of some common bugs. The final step in setting up the project is to add a GitHub workflow that runs the unit tests whenever code is pushed. This means I don’t have to wait for a whole test suite to complete before I commit: I can run the tests for the code I’ve changed locally and then commit.

Setting up a GitHub workflow is very simple:

- Create a .github folder in the root of the project
- Create a workflows folder in the .github folder
- Create a workflow file in the workflows directory. I always call mine build-and-test-project.yml
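The three steps above can be sketched as a short POSIX shell script. The workflow contents are an assumption rather than the exact file used for this post. It uses the standard `actions/checkout` and `actions/setup-python` actions and Python 3.11 to match the version installed earlier. It also assumes the code lives in a src directory and that pytest-cov comes in via requirements-dev.txt.

```shell
#!/bin/sh
set -eu

# Create .github/workflows in the project root (mkdir -p creates both levels)
mkdir -p .github/workflows

# On every push to main: install the pinned dev dependencies, then run the
# tests and fail the build if coverage of src/ drops below 80%
cat > .github/workflows/build-and-test-project.yml <<'EOF'
name: Build and test

on:
  push:
    branches: [main]

jobs:
  build-and-test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.11"
      - name: Install dependencies
        run: python -m pip install -r requirements-dev.txt
      - name: Run tests with coverage
        run: python -m pytest --cov=src --cov-fail-under=80
EOF
```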

Once you’ve pushed this file to GitHub, the workflow will run every subsequent time you push to main. My setup enforces a minimum coverage of 80%, which I think is a good level.

Retrofitting the tools to an existing project

Luckily, my AoC project didn’t have any kind of build pipeline, so I used it as a test for retrofitting build tools. I thought the process would be very involved and make for an interesting post, but it was actually very straightforward.

Here are the steps I followed (they are basically the same as above):

- Set up a venv for aoc-2021
- Installed pip-tools, then compiled and installed the dependencies
- Moved files to src and test directories. This was simple but mandraulic: the source files were easy, but I had to create tests for each source file. This turned into a pain as all the files were in different formats, so it took quite a long time to complete, but I got there eventually. This commit shows all the changes I had to apply
- Added pre-commit
- Added Black to the project
- Added Ruff to the project
- Added isort to the project
- Added pytest to the project
- Added a GitHub build workflow
- Committed all the changes. The pre-commit hooks kicked in and formatted the code; this was uneventful, and I ended up with only one issue to fix manually, which was an unused import.

That’s it: two years after setting up the repo, I now have some build tools and a build pipeline set up for it.